Source Address Classification - Feature Engineering

Jing Qiao •

Problem background

As we operate the authoritative name servers for the .nz ccTLD (Country Code Top Level Domain), we observe more than 500k unique source addresses sending DNS queries to us every day. According to DNS standards, those addresses should mainly be DNS resolvers acting on behalf of their users.

However, the real world is more complicated. Based on our observations, they're not all DNS resolvers. We've seen some known monitoring hosts constantly probing us for uptime testing. We've also seen some addresses setting Recursion Desired flag in their queries, indicating they're either non-resolvers or misconfigured resolvers. There're also many other addresses we're uncertain about what they are. These non-resolvers produce a lot of noise to our traffic data, which skews our view of the real use of the .nz namespace, and thus undermines the accuracy of our domain popularity ranking algorithm. If we're able to differentiate the resolvers from the non-resolvers, we can assign a higher weight to the traffic from a resolver in the domain popularity ranking algorithm to improve its accuracy.

Unfortunately, it's not easy to identify DNS resolvers, and as far as we know, no one has tried to do this using passive data from an authoritative DNS source.

Retrospect

Aware of this challenge, we started to build a classifier to predict whether a source address is a resolver or not. First, we tried to build a supervised classifier. We collected a sample of known resolvers and monitors for training, and derived 14 features based on 1 day's DNS traffic received by the nameservers in NZ. Though the classifier didn't detect many non-resolvers as we expected, we realized our training data was bias by not including representative samples of other patterns, which caused the model to be overfitting. So we turned to the unsupervised method to learn the inherent structure of the data to discover more hidden patterns. The efforts of training the supervised and unsupervised classifier were presented together in "In the search of resolvers", OARC 25.

The unsupervised model showed some interesting patterns and performed well by clustering together most of the known resolvers, but had a problem of mixing some monitors with resolvers in the same cluster. The reason could be that our feature set was not enough to differentiate some monitors from resolvers, or 1 day's data is not enough for the discrimination. So we extended the data to a longer period of time, 92 days, and looked for more features that could help capture the underlying differences. We ended up with a new clustering model with 30 features documented in "Understanding traffic sources seen at .nz", OARC 27.

Recently, we've been extending our sample set, doing a lot of feature engineering and got further progress on improving the unsupervised clustering. This post will focus on the feature engineering part and a second post in the near future will explain the clustering efforts.

Feature engineering is key

In a machine learning practice, you need to define a set of features that's fed into a machine learning algorithm to let it learn and predict. A good feature set often determines your success in practice. But the signals are often hidden in the raw data and implicit features need to be found by human intuition and domain knowledge. So feature engineering is recommended to be the critical part to put the effort on to succeed in a machine learning practice.

" Coming up with features is difficult, time-consuming, requires expert knowledge. Applied machine learning is basically feature engineering.

" - Andrew Ng

You might like to take a deeper look at feature engineering in this post.

Initial set of features

We have DNS queries and responses captured and saved in Hadoop tables for analysis. Each query record contains most fields in a DNS query packet, such as the source IP address, timestamp, query name, query type, TTL(Time To Live), DNS flags, and etc. From a given source address, we can build a query stream with the response status, from which we extract and derive relevant features that can help characterize the query pattern and capture the uniqueness discriminating it from those of other types of source address.

You may want to refer to "DNS in a Glimpse" in my former blog for more about DNS query types and DNS response codes.

We initially brought up with 14 features, which can be categorized into 3 groups:

-

Fraction of unique query types could have different distribution for a different type of source address. For example, a resolver is likely to send a significant amount of type A queries asking for IPv4 addresses and also a certain amount of other query types, such as AAAA, MX, DS for doing validation, and etc., while a monitor or some tool, checking whether our server is running, probably only send queries for the same query type again and again.

-

Fraction of response codes can be useful when recognizing source addresses generating mostly NXDOMAIN (Domain doesn't exist) or REFUSED by the server, which is abnormal.

-

Fraction of DNS flags can also be useful features. For example, a normal resolver shouldn't set RD (Recursion Desired) bit in its queries to an authoritative name server.

Time factor

The dataset we're analyzing, the DNS query stream, is a time series. We need to consider the temporal behavior in our feature engineering. We've started recording the data since 2012 for the .nz name servers located in New Zealand. We just had access to additional locations hosted by CIRA. Now our dataset covers about 80% of the total .nz traffic.

We select a time window across 4 weeks in which we can observe a source address's daily behavior, weekday and weekends pattern, and profile in the whole period.

For daily pattern, we aggregated the query data on each day and calculated the amount of query types, domain names, query names, total queries, queries per domain, to produce multiple time series for a source address. For the time series classification and clustering, this article contains some interesting ideas and practices for reference. The most common and simple way is to summarize each time series using descriptive statistics like mean and percentiles.

For capturing the weekday versus weekends pattern, we calculated the fraction of weekday versus weekends in the total number of visible days for each source address.

For the whole period, we aggregated the 14 initial features. We also calculated the visible days and hours of each source address, which capture the activity of the source address during this period.

Data cleaning

I have to mention this as it's an important step to reduce the noise before feeding the data into the classification model. By domain knowledge, we defined some criteria that help filter out the addresses almost certainly not resolvers, and the instances with a very small number of data points making it not representative. For example, source addresses queried for the same domain during the whole 28-day period were removed, as a DNS resolver is not likely to query for only 1 domain in that long period. The source addresses only active for 1 day during the period or sending less than 10 queries per day were also removed, as they didn't present enough data in this period. By applying these conditions, we reduced our dataset from 2M to 500k unique source addresses.

To further remove noise, we selected the instances for source addresses with high visibility, thus reducing to 82k unique sources. We assume a source address with a high visibility in the 28-day period is very likely to be an active resolver or a monitor. Now we're going to find more features relevant to differentiating an active resolver from a monitor.

Entropy

Inspired by this article, we came up with the idea of using entropy to measure the amount of information in the DNS query flow from a source address. By instinct, a resolver, as the proxy of its real users, should have more randomness in its query flow. On the other hand, a monitor, which is programmed or triggered in a certain way and thus should be more deterministic.

From this point of view, we thought of two aspects that would affect the entropy of a source address, the inter-arrival time and the similarity of query names between consecutive queries. For the inter-arrival time, we calculated the inter-arrival time between two consecutive queries, and the inter-arrival time between two consecutive instances of the same query (Two queries are the same when they contain identical query name and query type). For the query name, we calculated the similarity of the query names between two consecutive queries using Jaro Winkler string distance.

Variability

We also came up with variability as a way to measure the difference between an active resolver and a monitor. A monitor's query flow is likely to be stable across time, and a resolver, on behalf of its users, should be more random in its queries. We found some variance metrics, such as IQR, QCD, Mean Absolute Difference, Median Absolute Deviation, CV, powerful to quantify the variability of a set of data.

We first partitioned the whole period by the hour, and aggregated the time series features per hour, as well as calculated the mean entropy in each hourly window. Then we use the aforementioned variance metrics to calculate the variability of these aggregated features across the hour tiles.

Feature selection

After the feature brainstorming and exploration above, we come up with 66 features in total. They might not be all good features though. Redundant and irrelevant features will introduce noise into the dataset, thus could decrease the accuracy of the model.

First, we did a redundancy check on our feature set by computing the correlations among the features. 12 features with above 0.95 correlation were removed.

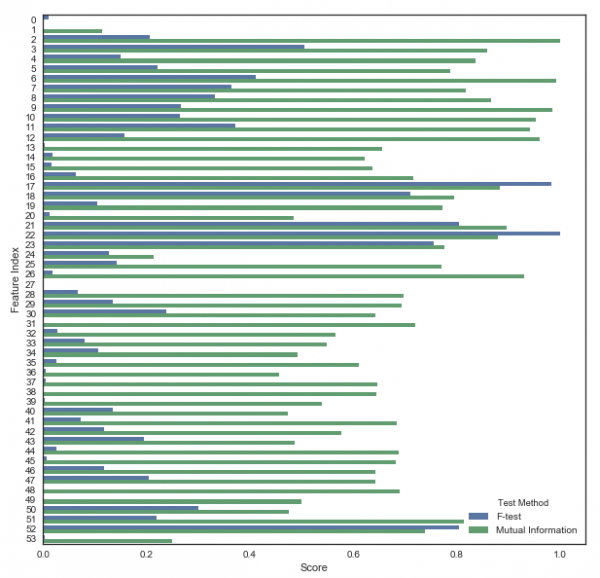

Within the 82k source address to work with, we have 558 labeled resolvers and 82 monitors. It's possible to explore which features are most relevant to the label value. We tried univariate feature selection with different statistical tests, such as F-test and Mutual Information (MI).

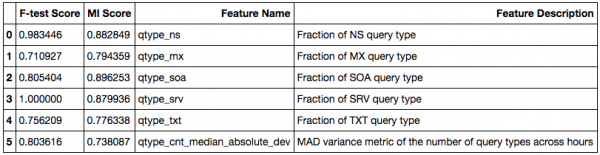

As illustrated in this example, mutual information method can capture any kind of statistical dependency while F-test captures only linear dependency. In the above plot, some features get a low score in F-test but a high score in MI test, indicating they have a non-linear relationship with the target.

Diving into the features with high scores in both F-test and MI, we can find, features around the fraction of query types beyond A, AAAA, DS and DNSKEY, and the variability of the number of unique query types across hours play a predominant role in demarcating the resolvers and monitors in our labeled data.

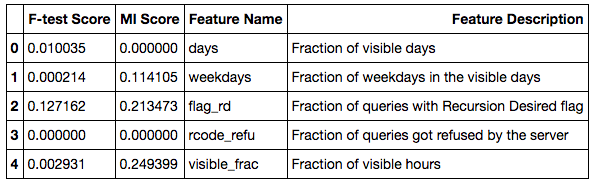

On the other hand, features showing low scores in both metrics, such as the fraction of visible days and hours, the fraction of weekdays versus weekends, the fraction of the response code of REFUSED and the fraction of the Recursion Desired DNS flag got a very low score in both tests. This is as expected as we filtered out the low visibility instances which make the visibility features less meaningful. And Recursion Desired flag and Refused response code seem not significant in our sample data to help differentiate resolvers and monitors, but could be useful features in another scenario, for example, anomaly detection.

In addition, we can try a different feature selection algorithm, for example, the algorithm that evaluates the coeffect of multiple features such as Recursive feature elimination. We can also rank the features on the clustering result to help comprehend and interpret the model, which will be introduced in a future post.

Conclusion

Feature engineering is hard. It's where the domain knowledge and creativity show the power. We put a lot of effort into it and got more and more good features that really improved the clustering result. Thanks Sebastian for providing great ideas on the entropy and variance metrics. Through feature engineering, we not only find the way to improve our domain popularity ranking algorithm, but also got a deeper understanding of our data, and learned lots of techniques that further boost our expertise. Later I will write something about the clustering model trained on these features.